
Openfort lets users hold and use wallets across a wide range of chains. By migrating from an in-house JSON-RPC management system to eRPC, Openfort eliminated infrastructure bottlenecks, stabilized CPU usage, and achieved consistent performance across its multi-chain infrastructure.

This case study is based on the original article published by Openfort; the full article is available on their blog.

Openfort enables users to manage wallets across a growing number of blockchain networks. Handling JSON-RPC operations for this many chains requires robust, unified infrastructure.

Our JSON-RPC management system needed to support:

We built a Go-based solution that leveraged Go's concurrency model and asynchronous I/O. The system used Redis for caching and Elasticsearch for upstream selection. It performed well and scaled horizontally, but as our user base grew, Elasticsearch began consuming excessive resources during high-load periods, causing CPU spikes that threatened other services.

We considered several approaches to address the Elasticsearch bottleneck:

None of these solutions were ideal. We decided to explore alternatives that could eliminate our Elasticsearch dependency entirely.

eRPC emerged as a strong candidate, supporting all our existing requirements plus features we had planned to build. Key advantages included:

Additional features that enhanced our infrastructure:

We adopted a phased approach, starting with a single chain and a few upstreams to validate behavior. After confirming standard and ERC-4337 bundler calls worked correctly, we progressively added chains and upstreams until reaching feature parity with our in-house system.
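A per-chain smoke check of the kind described above might look like the following Go sketch. It encodes one standard call (`eth_chainId`) and one ERC-4337 bundler call (`eth_supportedEntryPoints`) as JSON-RPC 2.0 payloads and validates a response envelope. The helper names `smokeTestPayloads` and `checkResponse` are hypothetical, the choice of methods is an assumption, and actually sending the payloads to an eRPC endpoint is left out.

```go
package main

import (
	"encoding/json"
	"errors"
	"fmt"
)

// rpcRequest is a JSON-RPC 2.0 request envelope.
type rpcRequest struct {
	JSONRPC string        `json:"jsonrpc"`
	ID      int           `json:"id"`
	Method  string        `json:"method"`
	Params  []interface{} `json:"params"`
}

// rpcError is the error object a JSON-RPC 2.0 response may carry.
type rpcError struct {
	Code    int    `json:"code"`
	Message string `json:"message"`
}

// rpcResponse is a JSON-RPC 2.0 response envelope (numeric ids only, for simplicity).
type rpcResponse struct {
	JSONRPC string          `json:"jsonrpc"`
	ID      int             `json:"id"`
	Result  json.RawMessage `json:"result"`
	Error   *rpcError       `json:"error"`
}

// smokeTestPayloads (hypothetical helper) encodes one standard call and one
// ERC-4337 bundler call, both without parameters.
func smokeTestPayloads() ([]string, error) {
	reqs := []rpcRequest{
		{JSONRPC: "2.0", ID: 1, Method: "eth_chainId", Params: []interface{}{}},
		{JSONRPC: "2.0", ID: 2, Method: "eth_supportedEntryPoints", Params: []interface{}{}},
	}
	out := make([]string, 0, len(reqs))
	for _, r := range reqs {
		b, err := json.Marshal(r)
		if err != nil {
			return nil, err
		}
		out = append(out, string(b))
	}
	return out, nil
}

// checkResponse (hypothetical helper) rejects malformed envelopes, id
// mismatches, RPC errors, and responses without a result.
func checkResponse(body []byte, wantID int) error {
	var resp rpcResponse
	if err := json.Unmarshal(body, &resp); err != nil {
		return fmt.Errorf("invalid JSON: %w", err)
	}
	if resp.JSONRPC != "2.0" {
		return fmt.Errorf("unexpected jsonrpc version %q", resp.JSONRPC)
	}
	if resp.ID != wantID {
		return fmt.Errorf("id mismatch: got %d, want %d", resp.ID, wantID)
	}
	if resp.Error != nil {
		return fmt.Errorf("rpc error %d: %s", resp.Error.Code, resp.Error.Message)
	}
	if len(resp.Result) == 0 {
		return errors.New("missing result")
	}
	return nil
}

func main() {
	payloads, err := smokeTestPayloads()
	if err != nil {
		panic(err)
	}
	for _, p := range payloads {
		fmt.Println(p)
	}
	// A sample eth_chainId response (0x1 is Ethereum mainnet); prints <nil>.
	fmt.Println(checkResponse([]byte(`{"jsonrpc":"2.0","id":1,"result":"0x1"}`), 1))
}
```

Running the same two checks against each newly added chain, first on the existing system and then through eRPC, is one way to confirm parity before moving on.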

During the migration, we contributed fixes back to eRPC for Pimlico providers and JSON-RPC encoding. We ran eRPC alongside our existing solution during a trial period and used that time to optimize configurations, tune upstream settings, and configure caching policies properly.

Deployment was straightforward thanks to eRPC's documentation and public Kubernetes configuration examples.

The eRPC team was responsive through community channels and GitHub. Our main challenge involved understanding failsafe upstream behavior.

We expected failsafe upstreams to be tried after all primary upstreams failed. For example, with upstreams A, B, and C (C marked as failsafe), we expected the sequence: try A → fails → try B → fails → try C. Instead, eRPC would give up after A and B without attempting C.

The issue: failsafe upstreams only activate once the primary upstreams are marked "unhealthy," which takes multiple failures. Until that threshold is reached, A and B can both fail a request without triggering the failsafe.

Solution: We configured failsafe upstreams as normal upstreams with a selection policy prioritizing low request counts. This ensures they're tried last when other upstreams are healthy, as higher usage decreases their priority.

eRPC has significantly improved our infrastructure stability and scalability:

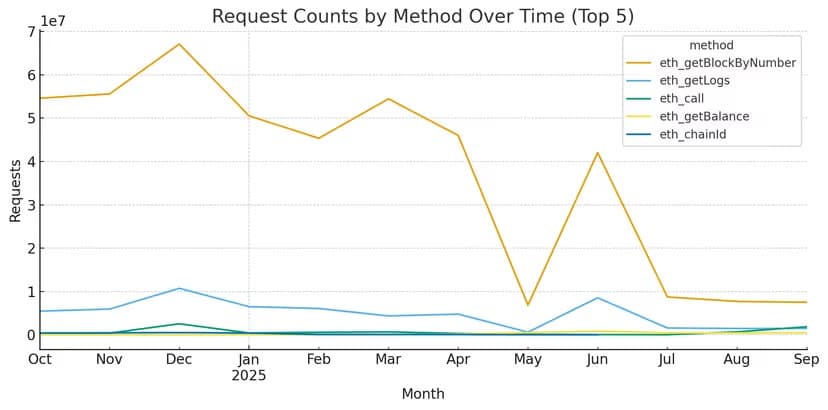

After deploying eRPC in June 2025, request volumes stabilized with: